- Google-free Android on Fairphone, again

- Ask your software developer about software complexity

- New Fairphone2 camera module - before and after pictures

- My email to European MPs about the coming copyright reform

- A digital home audio/video setup for less than 300 EUR

- Explainable algorithms? Don't count on it.

- Dissertation finally defended

- My favourite five slides from DockerCon Europe 2014

- Talk: Getting a first grip on doing large computations at CWI

- The unintended major purpose of this website and its dirty secret

- The EU is measuring innovation badly

- Support your heroes by funding their legal defense!

I bought a Fairphone3. I was hoping to quickly get a Google-free, Fairphone-supported Android again. You know: no giant corporation keeping tabs on where I am at all times and whom I talk or write to. Plus, it doesn't really feel like my phone if a Google account is "connected" on there.

Anyway, Fairphone didn't actually want to go to the same trouble again as they did with the Fairphone2, so I had to wait. And now, for the first time, I actually "rooted" my phone. It's now mine again!

I used the /e/ foundation's version of LineageOS, which has a great mission (an actual Google-free phone[1]), a nice roadmap and support for my exact phone model, including a good installation tutorial.

After two weeks of using the new OS and apps, I can say I am quite happy!

How I'm using it

Here are some points to make on what the /e/+LineageOS approach is and how I'm using it:

- You don't need an /e/ account. It's only a service they offer, should some people like to back up mails and contacts between phones or computers. I have Nextcloud for that.

- The /e/ foundation added the MagicEarth app, so there is some decent navigation based on OpenStreetMap data. I have to say it works quite well!

- /e/ comes with its own app store, which I like. It has APKs of popular non-free apps, like WhatsApp or Netflix, should you want or need them. The source for these APK files is cleanapk.org, which is still a hot topic in the free software world. You can install other stores, of course, like F-Droid (completely free) or Aurora (niche apps from the Play Store). Updating apps works well in all of them.

- They don't give you much control over what's on your home screen (app launchers, widgets). You can't delete the standard ones, so you have less space for the ones you care about. The solution is pretty cool, once you know it: you can install a different launcher. I used Launcher<3. Now I have space for the two widgets I love: AgendaWidget (installable from the app store) and TaskWidget (comes with OpenTasks).

- The old gang that handles my Nextcloud integration on Android works better each time I set up a phone: Nextcloud app + DAVx5 + ICSx5 + OpenTasks

- Other notable basic necessities, while we're at it: AnySoftKeyboard, KeePassXC, WebTube

What is the future for /e/? Apparently, they're working on a more consistent look and feel, so that the standard apps they offer look more alike. I'd like that. For example, the Mail app is a fork of K-9 with a clean, modern look. However, the fork is based on a rather outdated version of K-9; I hope they catch up.

Drawbacks

I don't want to leave the impression that not going with the mainstream is all roses. It does involve some small sacrifices, on top of doing your own OS installation (which is not really hard, but it will take you at least an hour, and then you still have to install and configure your apps).

Here is a list of annoyances I have so far:

- Typing with auto-correction is okay, but I know it's a bit worse than cutting edge. More mistakes slip in.

- WebTube's full-screen mode is not full screen.

- MicroG keeps crashing, and I don't know what that means for my OS functionality.

- My phone would like to connect to my Chromecast, but it can't.

- Text-to-speech is a while away, but somebody's working on it.

Actually, all or most of these issues are being worked on. It's a matter of time.

Living somewhat free of giant corporations means you'll be living in the slight past, maybe two or three years behind. It's really not that bad, but I can see that not everyone feels they can do it.

Trust

A big issue in this whole story, and also a complex one, is trust: we are here because we don't trust Google or Apple to be good stewards of all our data for all time. But we can't do it alone. We have to trust the makers of our alternative tooling to some degree as well. In this situation, I am putting various levels of trust in the makers of LineageOS, the /e/ foundation and (by extension) cleanapk.org. But I'm also trusting app makers like the guys at KeePassXC. Some of the trust here is easier to maintain with the monopolists: we're trusting Google and Apple (at the moment) to keep a good eye on the security of their app stores.

It's complicated.

[1] /e/ uses MicroG as a free and open-source replacement for Google Play Services, and Mozilla Location Service for geolocation. One of their goals is to make being Google-free possible for non-technical users. They even sell smartphones (like the FP3) with /e/ pre-installed.

Software is a peculiar thing. It is made by engineers, but estimating its costs and understanding the end product are very different from other engineering sectors. This difference comes from the level of complexity that comes with even reasonably sized software. The digital age has only just begun. It might be a while until we can estimate and understand software projects, if ever.

The paragraph above is true and well understood in the software industry by everyone who is paying attention. I refer you to two recently published and very well-written articles about this. Every layman should be able to read them. They look at this problem from two different angles: estimating reasonable costs and the expected stability of delivered software, and the understandability of software, especially when data science and machine learning are being used.

The Age Of Invisible Disasters:

Nobody dies on a failing CRM project. At least not so far as I know. So why should you care? Everyone still gets paid. You should care because within government, the only sector where failure gets any real coverage, I count £20b in failed IT projects over the last decade alone. Because within publicly quoted companies such failures are a bonfire of shareholder value. Because the ability to deliver technology projects will become the determining survival factor for many companies. Finally, you should care because if there is a skills shortage (or as I prefer to say, a talent shortage) and talent is tied-up with moribund IT projects, the lost opportunity cost to business is vast.

The terrifying, hidden reality of Ridiculously Complicated Algorithms:

If, as Jure suspects, machine judgement will become measurably better than human judgement for important decisions, the argument for using it will only grow stronger. And somewhere in that gap between inputs and outputs – the actual decision making part of the process itself – is something that can shape our lives in meaningful ways yet has become less and less understandable.

So, both estimations and understandability of software are currently really poor. That makes this a weird time to be involved in the software sector. It makes predicting the near future exciting. But it also makes any software development highly costly for businesses. It isn't, however, stopping the digital advancement. Information is power, and whoever gets it right can thrive.

Are you getting this angle from the software developers or software companies you talk to? I'm guessing the answer is no. I'm guessing they are telling you that your software project will certainly succeed, that maintenance will be no big deal, and so on. I get it: framing software projects the way I and the two articles above did might not be the best marketing approach. This is business, after all.

But if you want to know whether your software developer actually understands the situation, ask them about software complexity. Put your finger where it hurts. They should either admit, at least somewhat, to this weird state of their sector (and maybe tell you how they try to keep complexity at bay), or they are overselling and you should politely decline their services.

Fairphone is taking more steps towards its goal of modular phones that are used longer (instead of, for instance, buying a new phone because the camera is a bit outdated). I have an almost two-year-old Fairphone2, and taking photos and videos is one of its most important functions. Thus, I bought the new camera module.

The new module upgrades from 8 to 12 megapixels, but there is much more to a camera than the pixel count.

Here I'll show a few before and after pictures for any Fairphone2 owners who are interested. I'll show the original camera's picture first, then the new camera's.

First picture: Objects in fall afternoon sunlight, no special instructions

The original camera made some odd choices when taking the picture, especially with lighting. The new module got it much better. This is also a reminder that a camera is hardware, but it needs software that makes good decisions.

Second picture: Objects in fall afternoon sunlight, focus on the sunflower

When I focused on the sunflower, the original camera took a better picture than in the first shot. The new camera, however, still gets more out of the scene: the table isn't as dark, and the colours are generally more natural.

Third picture: Freezer magnets on slightly reflective surface

It's less obvious, but I think the new camera gets details like natural colours and reflections noticeably better.

I also took a comparison picture in a quite dark setting, and the two cameras made very different choices. The old one caught too much light, the new one slightly too little. I installed OpenCam to see what difference another camera app would make, and that result was much better.

I recommend getting the new camera if photos are important to you (e.g. you take 90% of the family pictures with your Fairphone2 and you believe catching scenes slightly more naturally is worth 45 EUR). I also recommend not underestimating what a different app can do.

I was inspired by a call to write members of the European Parliament about upcoming legislation. Christian Engström writes in this post that self-written emails have the greatest effect, so I picked the two topics still being discussed and wrote about those.

Here is the text of my email:

Dear delegate of the JURI committee,

I am writing to you from The Netherlands as a citizen who is very concerned about internet legislation. As a computer programmer and father of two, I am following the current debate about the future of copyright with great interest.

I want to focus on two articles which I find so troubling that I am writing to you today. These are the topics I discuss among my peers and raise awareness of: Article 3 (copyright exception for the modern research method Text and Data Mining) and Article 13 (automatic upload filtering).

Article 3:

I urge you to not only give the right to mine data to "researchers".

* We need journalists to have this opportunity as well. "Big data" is where important revelations can be found, and we direly need them.

* Also, big companies will find data troves or have already built their own. It stifles innovation if small, young companies are not allowed to find value in large amounts of data.

Article 13:

I urge you not to mandate that we hand over to machines the decision of what can be uploaded and what cannot. Machines cannot do this task, because they do not understand the context (e.g. satire) and uses covered by exceptions. But even more, this is

1) a censorship-like scenario, in which companies would rather block too much than be accused of not blocking forcefully enough.

2) working towards centralisation of publishers, since implementing such blocking behaviour is expensive and thus favours the big players. It will create even more of an oligopoly than we already have now.

Sincerely,

Nicolas Höning

In which I show my solution for listening to audio and watching videos at home, digitally, with acceptable quality (for most people), at very little cost and with almost no hassle getting it to work.

Here's a short rundown of the requirements I had and that you might share (in which case you might want to read on):

- All digital (sound and video files & streaming services)

- hassle-free setup - this is for the bulk of people who, like me, are not up for weeks of research or tinkering

- very low costs

- no vendor lock-in & use of standards where possible

- acceptable (not necessarily the world's greatest) audio quality

- remote control for everything (by mobile phone or actual remote controls)

And here is what I actually use:

PlayOn Harddisk Media player 40 EUR

Google Chromecast 39 EUR

Raspberry Pi as music box ~70 EUR

Envaya Bluetooth Audio Player 140 EUR

--------------------------------------------------------------------------

289 EUR

Why? - So here's the problem

Listening to music and watching videos at home: everyone (in a modern household) wants to do this, of course. These days, however, the number of ways to do it is vast, to the extent that researching what to buy can take forever. You can also build and configure a lot of things yourself, for instance using a Raspberry Pi and a custom-made luxurious sound card. I understand that there were tough choices to make 30 years ago as well, like how large the TV should be and whether gold cables are really needed to get everything out of your audio signal.

I believe that today the number of media sources has increased (and for me, only the digital ones really count anymore), because next to playing sound and video files from your hard drive, there are now a number of relevant streaming services you might want to use.

On the hardware side, many devices are on the market now that all play audio to you in some way, in varying locations and quality. In fact, it's a young market with a lot of companies trying to develop exactly what we need. It's exhausting to do research there!

A lot of these new solutions are designed to lock you in and to upsell, something Apple is really good at. They want you to buy something wireless for every room in your house and also for on-the-go and what started at 200 EUR ends up at 1300 EUR and you need to keep using this system from now on.

The (my) solution

So I decided to lower my expectations with respect to audio experience; otherwise I would never have installed anything modern. Apart from that, I believe I got pretty close to my requirements. Let's list what I bought:

Harddisk Media player

This little device plays almost any movie file you give it, and it comes with a remote control (not pictured). I like using USB sticks, which I can plug in there, but you could also connect a network cable and read files from some place in your LAN. I bought this a few years ago for around 90 EUR, I believe (it's out of stock now), but nowadays these devices all seem to cost around 40 EUR. Connect it to your TV via HDMI and it works.

Chromecast

Google makes this nifty little device, which connects to your Wifi and then lets you show most of the content your phone screen would show, streamed over the Wifi via the Chromecast to a connected screen, i.e. your TV. Your phone becomes a remote control. For streaming a few well-supported things (to be honest, YouTube almost all of the time), this works really well. You have to help it find your Wifi, but that is very nicely done. Its usability breaks down, though, if you are streaming content via an app that does not support it, or if you do not want the whole Google app stack on your phone (which I'm trying to avoid these days), so I'm eagerly awaiting more innovation in this gadget area.

Envaya Bluetooth Audio Player

The audio speaker is the area where one can lose the most time and money. I settled on a Bluetooth speaker that I can put anywhere I want, that has decent reviews about its sound (not great, but reviews from people with better ears than me, the audiophiles, are tiring), good bass and an AUX input, and that can even charge your phone. You could pay less here, but it is something you'll use a lot. Using the AUX input, it can even give your TV watching experience a boost. This works out of the box.

Raspberry Pi as music box

Streaming music to the speaker from the phone or laptop has its limits. It really occupies your phone, for example, and you cannot reach files on a hard drive from your phone. So one might want to use a music server, which can be controlled from laptop and phone. Enter Pi Musicbox, a ready-to-use open source software package that runs on a Raspberry Pi for this purpose. Basically, it runs Mopidy, an open source music server software, but neatly wraps it to fit the Raspberry Pi perfectly. The nice thing about Mopidy is that there are many mobile apps written for it, so you can control the Pi Musicbox nicely from a mobile device. Pi Musicbox plays files but also streams web radio stations and gives access to streaming services (though I cannot vouch for the Spotify support yet, as I'm still trying to get the most out of it; however, I can still use my phone for that). By the way, the 70 EUR I listed above roughly covers the Raspberry Pi (about 40 EUR) plus necessary extras like an SD card, a case, a power supply and a 32GB USB stick.

This gadget is the only one requiring a little work, but not much, as you can see:

1. put the Pi Musicbox image on an SD card

2. edit the config file so it finds your Wifi when it boots

3. put in the USB stick with your music

4. boot
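Step 2 is the only one that involves editing a file. As a minimal sketch, here is a small Python helper that fills in the Wi-Fi name and password in an INI-style settings file while leaving everything else, including comments, untouched. The key names `wifi_network` and `wifi_password`, and the assumption that these lines already exist in the file, are mine; check the settings file that ships with your Pi Musicbox image and adjust them if they differ.

```python
import re
from pathlib import Path


def set_wifi(settings_path: Path, ssid: str, password: str) -> None:
    """Fill in Wi-Fi credentials in an INI-style settings file.

    Assumption (check your image): the file already contains lines like
    `wifi_network =` and `wifi_password =`. Only those lines are rewritten,
    so comments and all other settings survive.
    """
    text = settings_path.read_text(encoding="utf-8")
    for key, value in (("wifi_network", ssid), ("wifi_password", password)):
        # Match a whole "key = old value" line; commented-out lines ("#...") do not match.
        pattern = re.compile(rf"^([ \t]*{key}[ \t]*=).*$", re.MULTILINE)
        # A function replacement keeps backslashes in the value literal.
        text, count = pattern.subn(lambda m: f"{m.group(1)} {value}", text)
        if count == 0:
            raise KeyError(f"{key!r} not found in {settings_path}")
    settings_path.write_text(text, encoding="utf-8")
```

With the SD card mounted on a laptop, a call would look like `set_wifi(Path("/path/to/settings.ini"), "MyHomeWifi", "my-password")`, where the path is a placeholder. I used plain line replacement rather than Python's `configparser` because the latter drops comments when it writes a file back; editing the file by hand in any text editor works just as well.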

Algorithms should be made open ("transparent") to the public. I doubt, however, that we will live in a world where you can demand an explanation of what an algorithm did to you.

So, algorithms are a hot topic now. They change our world! You might remember how Facebook has an algorithm that manages users' news feeds, but it performed very poorly and kept trending fake news. Sad! Or the current discussions about self-driving vehicles being a danger to our safety, or even a danger to social peace because millions of driver jobs are endangered.

Let me note here that in both of these cases, and in many similar ones, the awareness might be new, but the fact that algorithms do crucial work in our news feeds and our car safety systems is not that new. A good example is banks, which were already using data mining algorithms decades ago to decide who should get a mortgage.

Lots of demands, but few incentives

However - the conversation is starting on what we should demand from entities (companies, governments) who employ algorithms w.r.t. the accountability and transparency of automated decision-making. The conversation is being led by many stakeholders:

- Think Tanks: For example, Pew Research just published a report in which expert opinions on this question were gathered: "Experts worry [algorithms] can (...) put too much control in the hands of corporations and governments, perpetuate bias, create filter bubbles, cut choices, creativity and serendipity, and could result in greater unemployment.".

- NGOs: With Algorithm Watch we now have the first NGO that dedicates itself to "evaluate and shed light on algorithmic decision making processes that have a social relevance".

- Governments: The EU General Data Protection Regulation (GDPR, which applies from 2018) has a section about citizens having "the right to question and fight decisions that affect them that have been made on a purely algorithmic basis".

There are many things being said, and I find this discussion more vague the longer I listen, but maybe the one specific demand all these stakeholders would get behind is this: Explain how the algorithm affects my life.

So it seems like a lot might be happening in this direction, but I believe very little will happen. Why?

- It is very costly to develop this feature (of explainable algorithms). I'll discuss why that is in more detail below. In fact, this feature is so costly, making it mandatory might actually drive smaller software development shops out of the market, as only the big players have enough manpower to pull it off.

- Customers will not demand it in everyday products, no matter what think tanks say. Open source software is not demanded by customers - it is being used because engineers love it.

- It will only become the norm in a specific type of algorithmic software if the product actually needs it. Consider a medical diagnosis software - the doctor needs to explain this diagnosis to the patient. Or the mortgage decision example where I believe lawmakers could step in and make banks tell you exactly why they will not give you a loan.
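For a simple, hand-designed scoring rule like the one in the mortgage example, explaining an individual decision is cheap, because each input's contribution to the score can simply be listed. The sketch below assumes such a linear rule; the feature names, weights and threshold are entirely hypothetical and not taken from any real bank.

```python
# Hypothetical linear loan score: feature names, weights and threshold are
# made up for illustration and do not describe any real bank's model.
WEIGHTS = {
    "income_to_loan_ratio": 2.0,
    "years_employed": 0.3,
    "missed_payments": -1.5,
}
BIAS = -1.0
THRESHOLD = 0.0


def decide_with_reasons(applicant: dict[str, float]) -> tuple[bool, list[tuple[str, float]]]:
    """Return the decision plus each feature's contribution, most negative first."""
    contributions = {name: weight * applicant[name] for name, weight in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    # Sorting by contribution puts the factors that hurt the applicant most on top.
    reasons = sorted(contributions.items(), key=lambda item: item[1])
    return score >= THRESHOLD, reasons


approved, reasons = decide_with_reasons(
    {"income_to_loan_ratio": 0.25, "years_employed": 2, "missed_payments": 1}
)
print(approved)    # False
print(reasons[0])  # ('missed_payments', -1.5)
```

Here the explanation of a specific decision is exact: the score is literally the sum of the listed contributions. For the complex models discussed in this post, the internals do not break down into such a short and exact per-input list.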

Explanations: decisions versus design

Algorithms should be "explainable", they say. However, there is no consensus on what that actually means.

In the light of complexity, I believe that what is explainable is not well-defined - while many people talk about explaining individual decisions, all we can really hope for in most cases is explaining the general design - which is much less helpful if our goal is to help people who have been wronged in specific situations. There can and should be transparency, but it might be less satisfying than many people hope for.

A new paper by Wachter et al. (2017) makes a good point here that I agree with. They say that many stakeholders in the EU General Data Protection Regulation (see above) have claimed that "a right to explanation of decisions" should be legally mandated, but it is not (yet). Rather, what the law includes as of now is a "right to be informed about the logic involved, as well as the significance and the envisaged consequences of automated decision-making systems". They also make a distinction between explanations for "specific decisions" versus "system functionality", and they state that the former is probably not "technically feasible", citing a book explaining how Machine Learning algorithms are not easy to understand.

I agree with their doubts here. Let me briefly dive into the feasibility problems with explaining specific decisions and also broaden the scope a bit. To me, it comes down to the complexity that is inherent to many IT systems being built these days:

- Complex data: Let's look at the Facebook news feed example. There is a lot of data going into this algorithm. The algorithm makes thousands of decisions for you while you surf, and to fully explain one of them at a later time, you'd need a complete snapshot of Facebook's database at the very second the decision was made. That is unrealistic.

- Complex algorithms: Many algorithms are hard for humans to understand. Maybe the prime example here is machine learning. These algorithms are shown real-world data, so they can build a model of the real world, and they use this model to make decisions in real-world situations. The model is often unintelligible to human onlookers. For instance, a neural network (as used in Deep Learning) reaches a decision in a way that the engineers who "created" it cannot explain to you: its behaviour is spread over a large number of numeric weights that were tuned automatically during training (typically via so-called "back propagation", which passes error signals backwards through the network), and no single weight carries a meaning a human could read off.

- Complex systems: Finally, many algorithms might actually not live inside one computer. You might interact with a system of networked computers, which interact and all contribute their own part to what is happening to you. My favourite example here is a modern traffic control system that interacts with you while you travel through it, and at each intersection you encounter a different computer. I actually argued in a paper I published in 2010 that decentralised autonomic computing systems tend not to be "comprehensible".

So I believe (and agree with Wachter et al.) that the demand to explain, for any given situation, exactly how the algorithm made a decision is hard to meet. What can be explained is the design of the algorithm, the data that went into its creation and its decision-making, and so on. This is a general explanation, which might be useful to explain to the public why an algorithm treats problems in a certain way. Or it might be useful in a class action lawsuit. But it cannot be used to give any particular person the satisfaction of understanding what happened to them. That is the new reality, which probably already exists, but it has to sink in.

The notion of complex, almost incomprehensible algorithms has been brought up before, by the way. I want to mention Dr. Phoebe Sengers, who noted that software agents tend to become incomprehensible in their behaviour as they grow more complex ("Schizophrenia and Narrative in Artificial Agents", 2002), and of course the great Isaac Asimov, who invented the profession of "robot psychologist".

I dragged it along with me after my contract ended in 2014, but I did make a finished product out of my dissertation after all and defended it at TU Delft this past May.

It was a pretty formal procedure, as you can see, but quite a meaningful ending, and actually a fun day in the end.

The dissertation itself is available here officially, but also hosted by me. I'll post the propositions here:

1. Both the need for low computational complexity of bidding and for effective capabilities of planning-ahead can be addressed in a market mechanism for electricity, that combines the trade of binding commitments as well as reserve capacity into one bid [this thesis, chapter 3].

2. In settings where a uniform price changes dynamically over time and where these dynamics are influenced significantly by consumer behaviour, the ability of a consumer to comprehend price patterns increases if a large part of the other consumers reacts to price dynamics in a manner similar to how he himself reacts to them [this thesis, chapter 4].

3. Dynamic pricing for electricity can effectively reduce consumption peaks, also under the two conditions that the retailer promises an upper limit on prices and designs his pricing strategy for profit maximisation [this thesis, chapter 5].

4. A heuristic control strategy for a battery which is limited in capacity can be designed such that it has the following three advantages: it reacts fast, it can reduce overheating of a connected low-voltage cable significantly and (if prices are dynamic) it can partly earn back the acquisition cost of the battery by performing revenue management [this thesis, chapter 6].

5. There is not one silver bullet to the problem of how to manage a smart grid in the most efficient way. Each setting has its own requirements, given by its own set of stakeholders and design objectives.

6. To have a healthy and happy toddler is not to a small degree a matter of luck.

7. For the foreseeable future, concerns about privacy need to focus on computers and mobile phones, which directly expose political views and social contacts of their owner, rather than smart meters, which expose less meaningful data.

8. If users do not comprehend the reason why a novel technology interacts with them in the way it does, it will not be adopted, even if it is useful and resource-friendly.

9. Electricity grids are the largest man-made synchronous machines, and economies are the most complex man-made systems. To combine them leads to much more complexity than is commonly assumed, and the resulting systems will therefore never be completely understood.

10. In a referral network, where agents base their opinion about the performance of a service on those of other agents, it is beneficial for users if the agents forget old information at a comparable rate. [N. Höning: "Discounting Experience in Referral Networks", Master thesis, Vrije Universiteit (2009)]
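Proposition 10 is about agents forgetting old experience. One common way to model forgetting, assumed here purely for illustration (the thesis itself may use a different scheme), is exponential discounting: a rating that is k steps old gets the weight discount**k. The function name and its parameters are hypothetical.

```python
def discounted_opinion(ratings: list[float], discount: float) -> float:
    """Weighted mean of past ratings (oldest first); a rating that is k steps
    old gets weight discount**k, so discount=1.0 means never forgetting."""
    if not ratings:
        raise ValueError("need at least one rating")
    if not 0.0 < discount <= 1.0:
        raise ValueError("discount must be in (0, 1]")
    newest = len(ratings) - 1
    weights = [discount ** (newest - i) for i in range(len(ratings))]
    return sum(w * r for w, r in zip(weights, ratings)) / sum(weights)


# A service that was good twice and then bad once.
history = [1.0, 1.0, 0.0]
print(discounted_opinion(history, 1.0))  # 0.666... (no forgetting)
print(discounted_opinion(history, 0.5))  # 0.428... (the recent bad experience weighs more)
```

One plausible intuition for the proposition: if two agents discount at very different rates, one still reports the old, good reputation while the other has already moved on, so the opinions they exchange describe different points in time.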

I also was asked to write a very short summary, which might be useful here:

New developments require us to reconsider how electricity is distributed and paid for. Some important reasons are renewable energy, electric vehicles, liberalised energy markets and the increasing number of smart devices. How we deal with these dynamics will affect important aspects of the upcoming decades, for example transportation, home automation, heating/cooling & climate change.

In order to keep the security of supply high and price fluctuations within acceptable ranges, we need to continuously decide who will supply or consume electricity, at what price and at what time. The resulting complexity should not grow too high for small participants; otherwise novel technology might not be adopted. This dissertation contributes market mechanisms and dynamic pricing strategies which can deal with this challenge and reach acceptable outcomes in four relevant problem settings (mostly situated in lower levels of the electricity grid).

The most critical problems to address are intervals with very high power flow, or with large differences between demand and supply which need to be evened out. Such “peaks” can result in steep price movements and even infrastructure problems. We study decision problems that will arise in expected scenarios where peak reduction becomes important. In order to arrive at an efficient and usable system, this research specifically looks into:

- Encouraging short-term adaptations as well as enabling planning ahead (of generation and consumption) within the same mechanism.

- Ensuring that small and/or non-sophisticated participants can still take part in mechanisms.

- Letting smart storage devices contribute to network protection.

We develop agent-based models to represent expected settings and propose novel solutions. We evaluate the solutions using stochastic computational simulations in parameterised scenarios.

I gave a similarly high-level overview in a short presentation before the defense.

Last week I attended DockerCon Europe 2014 which luckily happened in Amsterdam this year. I got my finger on the pulse of important developments in the ongoing evolution of the internet and a just-healthy dose of tech-optimism from current Silicon Valley prodigies. I thought I'd share my five favourite slides with a little comment on each.

The internet is still a technological Wild West. So many talented people. So much change and progress in technology each year. So many things you need to know to deliver something that doesn't break for some reason. So much still to achieve. Docker provides a usable (fast and well-documented) way to bundle things that you know are working into a container, then upload this container to any server and trust this functionality to be up and running and simply work. It wasn't a DockerCon talk, but I like this short breakdown of what holds us back and why containers can help a lot (9 slides). At Softwear, we are using Docker both in the CI workflow and partly in production (by the way, let me know if you want to do influential UX or QA engineering work for us).

The slide above shows the way of thinking going forward: build stacks from things you know will work and will also work together. Like Lego. Then make these lightweight stacks (your actual web applications) work together in creative ways. The current term for a picture like this is "Microservices". This slide from Adrian Cockcroft (who spent six years at Netflix) makes the point of how useful Docker will be in more detail. Adrian's presentation (all slides) probably generated the most food for thought. My favourite line (slide 26) is:

DevOps is a Re-Org!

meaning that software developers are taking over system operator/admin tasks in any company that does not actually run data centers (which is becoming a very concentrated business nowadays).

Next, we get to a Silicon Valley-style notion of how anticipated technology breakthroughs will change society. The Docker Inc. CEO asks here:

What happens when you separate the art of creation from concerns about production & distribution?

Subtly, there is a picture of the printing press. He is suggesting that creators of web applications might soon need to worry less about how to deploy their app so that it works, as the "container revolution" will make this trivial. Of course, web 1.0 kind of already did that for content. However, I can see how lowering a crucial technological barrier to inventing useful web applications can be really significant for innovation. We have a lot of content and ways to get it out there; now let us see what cool applications can be built to assist people everywhere in the world. And although we at this conference were a bunch of rich white males, poor people are hopefully getting access to cheap smartphones soon (Africa is a good example). I, too, find these times exciting. But I was glad to return to my normal life and cool down a bit.

This slide gives an indication of the scale of change we are seeing in the software world. Henk Kolk from ING told us how this large bank now sees itself as a technology company and removed everyone from its large IT team who cannot program at least a little. Being a programmer means being in demand right now, but as his slide also says, speed is key from now on. If you don't get on board with this new way of keeping tight control over your stacks while being totally flexible about switching technologies, all you will be doing is jumping from one sinking ship to the next. I got both excited and chilled, actually.

An interesting takeaway from the past weeks is how open source currently works. Big money has gotten in on it, because in the software world you have to invest in widespread and sustainable technology while also having a modern stack. This only works when an open source community carries the technology. Even Microsoft is coming around in major ways. Companies are employing the best open source programmers directly to stay on top of things. The software industry differs from other industries in this regard (and is hopefully leading the way). On the slide above, Docker Inc. CTO Solomon Hykes gives us his current set of rules that he thinks make a technology successful these days. As a consequence, Docker got some interesting new functionality (announced on the first conference day), but it was kept out of the core code: "Batteries included, but replaceable".

But it is also not all agreement and happy collaborative coding. No, sir. The latest trend is that a company or a startup guides an open source technology. This makes progress faster and more stable, but it can easily break if you annoy the community. Node was just forked, and AngularJS is having a community crisis. The Docker community is also wary of the Docker startup, Docker Inc. In fact, Solomon Hykes spent much of his time on stage at DockerCon Europe 2014 discussing how he wants to succeed as a steward of the Docker technology, using a process he calls "Open Design" (see all slides here). There is an Open Design API through which all feature requests have to go, separating people acting on behalf of Docker Inc. from people acting on behalf of the Docker Open Source project, no matter which company pays them at the moment. As we speak, they are creating and updating their own constitution, which deals with this construct (in structured text files, of course, so if you want to suggest a change, you submit a pull request). So the message of this slide above is simple and compelling:

The real value of Docker is not technology. It's getting people to agree on something.

Replace "Docker" with any standard, and this is something you could have said during any period of rapid development and change. Interesting times.

P.S. There were some really smart people at this conference, building amazing companies and systems. We can expect to see a lot, e.g. from the Apache Mesos project. I could have chosen more technical slides for this list, but it would have taken me longer to explain why I fancy them. A lot of them were also quite intimidating, actually.

Today, I gave a talk at CWI on how to become more efficient with complex computations in our scientific work. I discussed how I have approached the need for distributed computation (to scale up towards larger and more complex problems) and the problem of organising the scientific workflow when doing experimental work.

I promised listeners that they would get

- hands-on information on getting results from large-scale, "embarrassingly parallel" computations,

- ... without actual parallel programming,

- ... with little ssh effort,

- ... and using the programming language of your choice.

- Plus, some tools to keep track of experiments and data.

I would also (though not exclusively) mention the tools I have written, StoSim and FJD. I was happy with a turnout of 16 people, and I think we spent an interesting hour.
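To give an idea of what "embarrassingly parallel" means here, below is a minimal Python sketch of a parameter sweep. It is not the API of StoSim or FJD; it only uses the standard library's `concurrent.futures` to run many independent runs on a local process pool. The `simulate` function, its parameters (`n_agents`, `p_coop`, `seed`) and the helper names are hypothetical placeholders for a real stochastic simulation. Because each run depends only on its own parameters and random seed, no run has to talk to another, which is why no actual parallel programming is needed.

```python
import random
import statistics
from concurrent.futures import ProcessPoolExecutor
from itertools import product


def simulate(params):
    """Hypothetical stand-in for one stochastic simulation run.

    Each run depends only on its own parameters and seed, so runs never
    need to communicate: the sweep is "embarrassingly parallel".
    """
    n_agents, p_coop, seed = params
    rng = random.Random(seed)  # per-run RNG keeps every run reproducible
    cooperators = sum(1 for _ in range(n_agents) if rng.random() < p_coop)
    return n_agents, p_coop, seed, cooperators / n_agents


def run_sweep(agent_counts, probabilities, seeds, workers=4):
    """Run one job per (scenario, seed) combination on a local process pool."""
    jobs = list(product(agent_counts, probabilities, seeds))
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(simulate, jobs))


def summarise(results):
    """Average the outcome over seeds for each parameterised scenario."""
    by_scenario = {}
    for n_agents, p_coop, _seed, outcome in results:
        by_scenario.setdefault((n_agents, p_coop), []).append(outcome)
    return {scenario: statistics.mean(v) for scenario, v in by_scenario.items()}
```

A sweep would be started from a script's `if __name__ == "__main__":` block, for example `summarise(run_sweep([100, 1000], [0.2, 0.5], seeds=range(5)))`, since `ProcessPoolExecutor` may re-import the main module in its worker processes. Spreading such independent jobs over several machines, rather than one local pool, is the harder part that the talk addressed.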

Here are the slides (direct link to PDF):

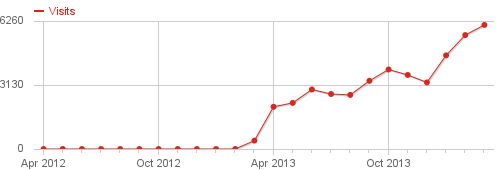

I'm quite happy with how the visits to this website of mine have developed over the last year. Here are the monthly numbers:

Btw, since March 2013 I use Piwik for my visitor analytics and am very happy. You should also be happy about that, because I don't store your metadata on Google's servers, only on mine.

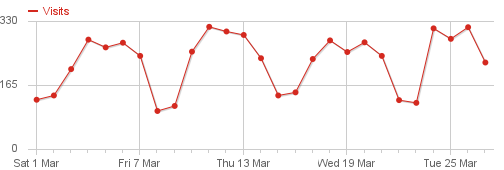

An average week looks like this:

You can tell that people mainly come to my site on workdays, weekends are rather quiet. Why is that?

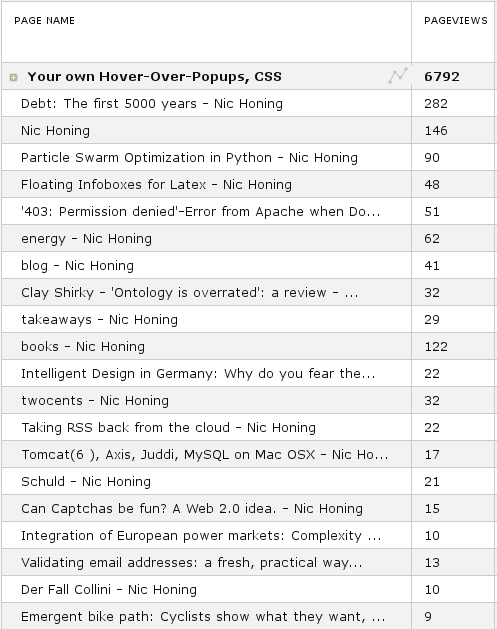

Well, around 2007 I wanted JavaScript tooltips, or "popups", that display context for links when you hover over them, styled the way I liked. So I wrote a small script. It is very simple, but the page explaining it generates almost all the traffic to this website. Look at these page view numbers ("page views" count loaded web pages, while a "visit" is one IP address performing one or more page views in one session) from (I think) one week:

The trend is clear. Around 300 people come to that page about the little javascript thingy every day, and not much else I write gets attention. And as you can tell from the long list of comments there, many people use this javascript thingy in the websites they build. I actually get some satisfaction in making them happy, so I answer many of their questions and actually improve the codebase once in a while.

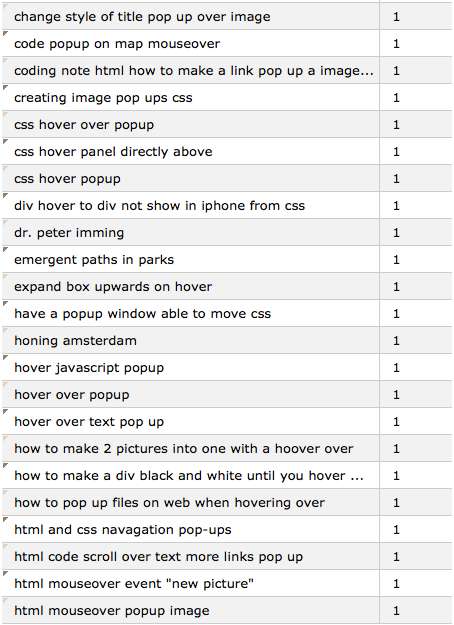
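The distinction between page views and visits can be made concrete with a small Python sketch. It is not how Piwik works internally: it assumes that a visit ends after 30 minutes without page views from the same IP address (a common convention, used here only as an assumption), and the log entries are made up, using documentation-only IP ranges.

```python
from datetime import datetime, timedelta

# Assumed inactivity timeout; Piwik's own setting may differ.
SESSION_TIMEOUT = timedelta(minutes=30)


def count_visits(page_views, timeout=SESSION_TIMEOUT):
    """Group (ip, timestamp) page views into visits.

    A new visit starts when an IP is seen for the first time, or when more
    than `timeout` has passed since that IP's previous page view.
    """
    last_seen = {}
    visits = 0
    for ip, ts in sorted(page_views, key=lambda pv: pv[1]):
        previous = last_seen.get(ip)
        if previous is None or ts - previous > timeout:
            visits += 1
        last_seen[ip] = ts
    return visits


# Hypothetical log: four page views from two IP addresses.
views = [
    ("203.0.113.5", datetime(2014, 3, 3, 9, 0)),
    ("203.0.113.5", datetime(2014, 3, 3, 9, 10)),   # same visit
    ("198.51.100.7", datetime(2014, 3, 3, 9, 12)),  # other IP: new visit
    ("203.0.113.5", datetime(2014, 3, 3, 14, 0)),   # long gap: new visit
]
print(len(views), "page views,", count_visits(views), "visits")  # 4 page views, 3 visits
```

The timeout is the main design choice: a longer one merges more page views into a single visit, so the same log yields fewer visits.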

But here is the problem: there are many other similar scripts out there, and I never get mentioned when experts list libraries for such a feature (I have been mentioned in two or three forums, I think). Why do people keep finding this one? I think the dirty little secret is that I called it a "popup", while the technically more correct term is "tooltip". Look at the search queries people used to reach this page:

There you go. Me and a significant amount of people use the slightly wrong term, and that's what drives traffic here. Accidental linguistic match-making in cyperspace. Positive things come from this. The traffic probably improves my Google ranking a lot. Our interactions help my users get something done and make me feel some fulfilment. It's a weird world.

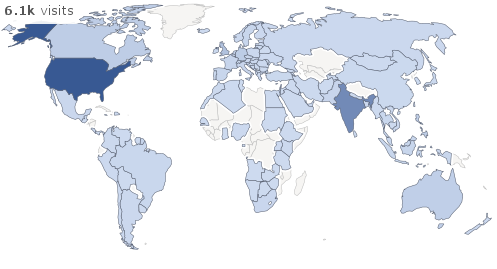

Speaking of the world, here is where my visitors are from: mostly English-speaking countries where a lot of web development happens.

The European Commission just announced a new indicator to measure innovation in its economy. I think it shows how the bureaucrats favour big companies, want to make it easy for themselves and hold a simplistic view of how innovation leads to positive net effects for the economy.

To quote, here are the ingredients of the new indicator:

Technological innovation as measured by patents.

Employment in knowledge-intensive activities as a percentage of total employment.

Competitiveness of knowledge-intensive goods and services. This is based on both the contribution of the trade balance of high-tech and medium-tech products to the total trade balance, and knowledge-intensive services as a share of the total services exports.

Employment in fast-growing firms of innovative sectors.

- First, the view that patents are important favours big established companies over small innovative ones. Small innovative software companies are often better off just moving on instead of wasting their time trying to get a patent. And don't forget that (luckily) it is difficult to patent software in Europe, so comparisons with the rest of the world are even more questionable here. Companies also often use patents for no other purpose than to block out other companies, which may be good for Europe (but only if a European company blocks out a non-European one); it hardly helps substantial innovation take shape in the economy. Another point: I think the EU assumes that innovation has one standard path to positive impacts for everyone: someone (a big company, see above) invents something, patents it and then hires people to develop the invention. As they start producing, they hire other companies as subcontractors, and so the positive effect trickles down from the inventors to the others. I think this sometimes happens, but only for big companies. This view neglects large parts of the economy. Innovation often happens in informal open spaces and/or in collaboration.

- As for the second ingredient: simply hiring people in "knowledge-intensive activities" gives you points, no matter their actual effect on innovation. That makes it easy to count, but what are we measuring? Are we measuring whether these employees do something useful with their knowledge-intensive work, or are we simply celebrating that people are getting paid to think? For example, I'm sure the banking and legal industries have lots of jobs that would count as knowledge-intensive. Is that a reason to celebrate them? Also, government spending on research gives a country points here, no matter what is actually researched.

- The third ingredient is the only one that makes at least some direct sense to me. One can compare the contribution of high-tech and knowledge-intensive activities with that of other goods and services and get some information out of it (although my point about the second ingredient holds here as well).

- The fourth ingredient is based on the assumption that fast-growing companies must be more innovative than others. That might sometimes be true, but often it probably is not. Growth != innovation. A dangerous way of thinking, in my opinion. Most often, rapid growth merely reflects the ability to attract capital, capital that is interested in rapid returns. The substance behind the company is indirectly related to the hope for rapid returns, but it is often not necessary for attracting this capital (as the last couple of bubbles should have taught us). If your concept of innovation is to enable turnover, it has not been formulated in society's best interest.

I think that behind this formalism there is a dangerous fanboy attitude toward big companies and big finance, retelling the story they like to tell: an effect of lobbying. What about small companies? They'll have an even harder time being "sexy" to EU bureaucrats now.

There is so much more happening in an economy, one of the most complex systems on earth. One example that comes to mind: what about innovation that doesn't directly make money but enables others to make money? For instance, what if people create an intelligent new way of doing things, but don't directly sell it? Maybe they open-source their powerful idea and run a successful little consultancy based on their newly found reputation. With their help, a part of the European economy improves considerably, in more than one way (they provide direct services, and they share their knowledge to enable many others), but the bureaucrats take no notice: their innovation flies completely under the EU radar.

I know it is difficult to "measure innovation", and I have not yet come up with a better way myself. However, it seems to me that not measuring at all would have been better than measuring like this. A counter-question: why do we actually need this number? As if the numbers we already have (like GDP) weren't alchemical and misleading enough.

I think we live in times in which both governments and citizens of western democracies are searching for new roles in their relationship. My point today is only this: the court systems are still working to some degree, and they seem to me a valuable battleground in this search. Citizens should pay attention and support the causes fought in their name.

First, what do I mean by this "search" for new roles? It is not as simple as some pundits described it after 1990, when they expected the state to simply take a step back. After some years of perceived openness, in which capitalism had supposedly "won" and everyone could relax and enjoy, I think we now rather see the state taking a stronger stand. One reason might be that governments feel threatened by globalization and the internet, which create too much openness and too many feedback loops for them to feel in control. Another might be rising tension on the resource front, as energy supply gets tight and climate change restricts what we might want to do with the resources we can still get our hands on. Or, more generally, this is just the usual dance between the governing and the governed, a step forth and a step back, and we will only see what it all meant after this song ends.

Anyhow, we are beginning to discuss what "freedom" should mean, again. And when I say discuss, I mostly mean "battling out". Emotions are running high and claims are bold and strong. Governments simply create new facts of what is legally possible, while the Chinese lean back and rub their hands.

Some citizens are being taken to court; others decide to escalate their quarrel with the government to court. In most of these government vs. someone trials, important definitions are being made, and, as far as the courts are still functioning, the outcomes of these battles are important for (re)defining freedom, more important than, say, a general opinion piece in The New York Times.

What is also new today is that it is dead simple to support, via the internet, the legal funds of people whose cases you find interesting. I believe that if you are interested in funding societal change, investing in someone's legal defense is (to use the lingo of our time) a sound investment. The amounts I gave are nothing to brag about, but I have begun to give to such individuals, and I think I should increase this activity. Here is a short list of those I remember supporting:

There are heroes out there fighting for you right now and it is easy to help them.

* Actually, I only gave some monetary support to Wikileaks as an organisation, after the Collateral Murder video. Criminal investigations into Assange started afterwards, and only then did it become clear that most of Wikileaks' funds seem to have gone towards his legal defense.